I have been monitoring my infrastructure with Nagios and its clones for years (in most cases Shinken, with Thruk as the UI). I often looked for a better replacement but always came back to my old solution. I had a look at Icinga 2, but for my taste it’s a bit over-engineered for what it does.

I also checked whether I could use Prometheus for my monitoring needs, especially because I like the metrics-based monitoring approach. Rather than defining checks and triggers that might send additional metrics, this approach collects metrics and applies the triggers to them. This brings a lot of advantages; for example, alerting rules are defined in the monitoring system, not in the monitored system.
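To make the idea concrete, here is a sketch of such a rule in the Prometheus rule-file format (which vmalert can also read). The group name, alert name, 90% threshold and 15-minute duration are made-up values for illustration; the metric names are the ones provided by Prometheus’ node exporter, which is introduced below.

```yaml
# Hypothetical rule file, e.g. rules/disk.yml (all names and values are illustrative)
groups:
  - name: node-disk
    rules:
      - alert: DiskAlmostFull
        # Evaluated centrally on the collected metrics, not on the monitored host
        expr: 100 - node_filesystem_free_bytes / node_filesystem_size_bytes * 100 > 90
        # Only fire if the condition holds for 15 minutes
        for: 15m
        labels:
          severity: warning
        annotations:
          summary: "{{ $labels.mountpoint }} on {{ $labels.instance }} is more than 90% full"
```

Changing the threshold means editing this one file on the monitoring server; the monitored hosts just keep exposing raw metrics.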

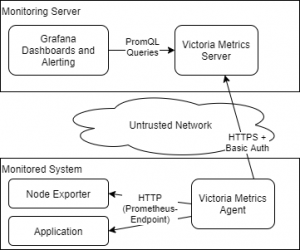

The only drawback for me was that Prometheus wants to “scrape” the applications, which requires direct access to their metrics endpoints. That means I would either have had to expose these endpoints to the web or run Prometheus on each of my systems. Since I’d like to stay with a centralized monitoring solution and cannot expose every system, neither was an option for me.

A while ago I was looking for an agent that collects metrics and sends them to a centralized Prometheus instance. I stumbled across Victoria Metrics, which has exactly the agent I was looking for, and a lot more. So I decided to base my new monitoring solution on it.

The architecture of my monitoring solution

The architecture of my new monitoring system will be quite simple. The central element is the Victoria Metrics server, a Prometheus-compatible time series database that will run on my central monitoring server. A replicated HA setup is also possible, but for my private needs a single installation will do. Later I will add an Uptime Robot check to ensure that the monitoring itself is available.

The data ingestion endpoint of the Victoria Metrics server will be protected by HTTPS and, in addition, by HTTP Basic Authentication.
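One way to do this is to publish the server through Traefik, just like Grafana. The following is a minimal sketch under assumptions: Traefik v2 is the proxy and can reach the `services` network (as it does for Grafana), `vm.example.com` is a hypothetical host name, and the user entry is a placeholder for the line printed by `htpasswd -nB agent`. Inside a compose file, every `$` in that hash must be doubled to `$$`.

```yaml
# Hypothetical labels for the vmserver service (Traefik v2)
services:
  vmserver:
    labels:
      traefik.enable: "true"
      # Hypothetical public host name for metrics ingestion
      traefik.http.routers.vmserver.rule: Host(`vm.example.com`)
      traefik.http.routers.vmserver.tls.certresolver: default
      # Require Basic Authentication before requests reach Victoria Metrics
      traefik.http.routers.vmserver.middlewares: vmserver-auth
      # Placeholder: output of `htpasswd -nB agent`, with each $ escaped as $$
      traefik.http.middlewares.vmserver-auth.basicauth.users: "<htpasswd-line>"
      # Victoria Metrics listens on port 8428 inside the container
      traefik.http.services.vmserver.loadbalancer.server.port: "8428"
```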

On each system I’d like to monitor, I will install Prometheus’ “Node Exporter”, an application that provides basic system metrics such as CPU and disk usage statistics. The Victoria Metrics agent (vmagent) collects these metrics and sends them to the central server.
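On a node other than the monitoring server, the agent cannot reach the server over the local Docker network, so it has to push over HTTPS with credentials. A sketch of such an agent service, assuming the ingestion endpoint is published as the hypothetical `https://vm.example.com` with Basic Authentication, and assuming the image’s default entrypoint is the vmagent binary so that `command` only supplies flags. `-remoteWrite.basicAuth.username` and `-remoteWrite.basicAuth.password` are vmagent flags; the user name, password and config path are placeholders.

```yaml
# Hypothetical vmagent service on a remote node (host name and credentials are placeholders)
services:
  vmagent:
    image: victoriametrics/vmagent:v1.54.1
    restart: unless-stopped
    volumes:
      # Scrape configuration prepared on the node (hypothetical path)
      - ./prometheus.yaml:/prometheus.yaml:ro
    command:
      # Push over HTTPS to the central server instead of the local Docker network
      - -remoteWrite.url=https://vm.example.com/api/v1/write
      # Credentials for the Basic Authentication in front of the server
      - -remoteWrite.basicAuth.username=agent
      - -remoteWrite.basicAuth.password=changeme
      - -promscrape.config=/prometheus.yaml
```

A password passed as a flag is visible via `docker inspect`, so the compose file on the node should be readable only by trusted users.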

On the server, I will use Grafana to visualize the metrics and to alert on them. In a later phase I may use more sophisticated alerting tools (e.g. vmalert from Victoria Metrics and/or Alertmanager from Prometheus).
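For that later phase, a vmalert service could run next to the Victoria Metrics server. This is a sketch under assumptions: the image tag is chosen to match the other Victoria Metrics components, `./alert-rules` is a hypothetical folder with Prometheus-format rule files, and an Alertmanager reachable as `alertmanager:9093` would still have to be set up.

```yaml
# Hypothetical vmalert service next to vmserver (paths and notifier address are assumptions)
services:
  vmalert:
    image: victoriametrics/vmalert:v1.54.1
    networks:
      - services
    restart: unless-stopped
    volumes:
      # Rule files in Prometheus format
      - ./alert-rules:/etc/alert-rules:ro
    command:
      # Evaluate rule expressions against Victoria Metrics
      - -datasource.url=http://vmserver:8428
      # Load all rule files from the mounted folder
      - -rule=/etc/alert-rules/*.yml
      # Send firing alerts to Alertmanager
      - -notifier.url=http://alertmanager:9093
```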

Setting up the Server

I decided to create a docker-compose based installation. Usually I run my workloads on Kubernetes, but since I want to monitor the cluster itself, it seems to be a good idea to run the monitoring server outside of it.

I already have a machine with docker-compose where I run Traefik and a Rancher 2 server, so I will add the monitoring server there.

```yaml
version: "3.5"

networks:
  services:
    external:
      name: services

services:
  grafana:
    image: grafana/grafana:7.4.3
    networks:
      - services
    restart: unless-stopped
    volumes:
      - ./grafana-data:/var/lib/grafana
    labels:
      # Published via Traefik with a TLS certificate
      traefik.enable: "true"
      traefik.http.routers.grafana.rule: Host(`mon.example.com`)
      traefik.http.routers.grafana.tls.certresolver: default
    environment:
      GF_LOG_MODE: console
      GF_SECURITY_ADMIN_USER: user@example.com
      # initial password - change on 1st login!
      GF_SECURITY_ADMIN_PASSWORD: s3cr3t!
    links:
      - vmserver

  vmserver:
    image: victoriametrics/victoria-metrics:v1.54.1
    networks:
      - services
    restart: unless-stopped
    command:
      # Keep data for 24 months (a value without suffix is counted in months)
      - -retentionPeriod=24
      # Scrape Victoria Metrics' own metrics every 30 seconds
      - -selfScrapeInterval=30s
    volumes:
      - ./victoria-metrics-data:/victoria-metrics-data
```

Important: the folder “grafana-data” must be owned by UID 472 (`chown 472 grafana-data`), the user the Grafana container runs as; otherwise Grafana will not start properly.

Log into Grafana via https://mon.example.com using the credentials from the docker-compose file. The first action should be to change the password!

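Then go to Data Sources and add a new Prometheus data source with the URL http://vmserver:8428; Victoria Metrics answers Prometheus queries on this port. After about 30 seconds, the first metrics (Victoria Metrics’ own, collected because of -selfScrapeInterval) should appear in the “Explore” view.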
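Instead of clicking through the UI, the data source can also be provisioned from a file. A sketch, assuming a hypothetical extra volume such as `./grafana-provisioning:/etc/grafana/provisioning:ro` on the grafana service, so that the file ends up in Grafana’s default provisioning folder; the data source name is arbitrary.

```yaml
# Hypothetical file: ./grafana-provisioning/datasources/victoria-metrics.yml
apiVersion: 1
datasources:
  - name: VictoriaMetrics
    # Victoria Metrics speaks the Prometheus query API
    type: prometheus
    # Grafana's backend sends the queries over the Docker network
    access: proxy
    url: http://vmserver:8428
    isDefault: true
```

With this in place, the data source survives a rebuild of the grafana-data folder.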

Monitoring the local node

Monitoring a node or its services takes three steps. First, the Victoria Metrics agent needs to be set up and configured to send its metrics to the metrics server. Second, Prometheus metrics endpoints that provide the actual metrics need to be set up. Finally, the agent needs to be configured to scrape these endpoints.

To configure the agent, either a static configuration file or some kind of auto discovery can be used. While the Victoria Metrics agent (as well as Prometheus) provides auto discovery for many environments like Kubernetes or Docker Swarm, at the time of writing neither of them supports auto discovery for plain Docker. Fortunately, there’s a third-party tool called prometheus-docker-sd that brings this functionality. Here’s how to set it up:

```yaml
version: "3.5"

networks:
  services:
    external:
      name: services

services:
  vmagent:
    image: victoriametrics/vmagent:v1.54.1
    networks:
      - services
    restart: unless-stopped
    volumes:
      # Target file written by prometheus-docker-sd (read-only for the agent)
      - ./prometheus-docker-sd:/prometheus-docker-sd:ro
    entrypoint:
      - /bin/sh
      - -ce
      - |
        # Write a scrape config that reads its targets from the discovery file
        cat << EOF > /prometheus.yaml
        scrape_configs:
          - job_name: 'service_discovery'
            file_sd_configs:
              - files:
                  - /prometheus-docker-sd/docker-targets.json
        EOF
        # Replace the shell with the vmagent process
        exec /vmagent-prod \
          -remoteWrite.url=http://vmserver:8428/api/v1/write \
          -promscrape.config=/prometheus.yaml \
          -promscrape.fileSDCheckInterval=30s

  prometheus-docker-sd:
    image: "stucky/prometheus-docker-sd:latest"
    networks:
      - services
    restart: unless-stopped
    volumes:
      # Access to the Docker API to find labeled containers
      - /var/run/docker.sock:/var/run/docker.sock
      # Output folder for docker-targets.json, shared with vmagent
      - ./prometheus-docker-sd:/prometheus-docker-sd

  node-exporter:
    image: prom/node-exporter:v1.1.1
    # No "networks" here: docker-compose does not allow combining it with network_mode
    network_mode: host
    pid: host
    restart: unless-stopped
    container_name: node_exporter
    command:
      - '--path.rootfs=/host'
      - '--collector.filesystem.ignored-fs-types=tmpfs'
    volumes:
      # Host root filesystem, read-only, for filesystem metrics
      - '/:/host:ro,rslave'
    labels:
      # Picked up by prometheus-docker-sd
      prometheus-scrape.enabled: "true"
      prometheus-scrape.port: "9100"
      prometheus-scrape.metrics_path: /metrics
      # docker0 address, since a host-network container has no IP of its own
      prometheus-scrape.hostname: "172.17.0.1"
```

The vmagent service configures the agent. It mounts the folder prometheus-docker-sd read-only; this folder will contain the target file created by the auto discovery service. The entrypoint is overridden by a small shell script that first writes a scrape configuration reading its targets from that folder and then starts vmagent with it.

The prometheus-docker-sd service is the auto discovery service. Through the mounted Docker socket it scans the running containers for special labels (prometheus-scrape.*) and writes the resulting scrape targets into docker-targets.json.
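The target file follows Prometheus’ file-based service discovery format: a list of target groups, each with addresses and optional labels. A hand-written equivalent for the node exporter might look roughly like this. file_sd also accepts YAML, which is shown here, whereas prometheus-docker-sd writes JSON, and the labels the tool actually adds may differ; the `node` label is hypothetical.

```yaml
# Illustrative file_sd target list (hand-written equivalent, not the tool's exact output)
- targets:
    # host:port to scrape: the node exporter via the docker0 address
    - 172.17.0.1:9100
  labels:
    # Hypothetical extra label attached to every series from this target
    node: monitoring-server
    # Special label that sets the scrape path for this target group
    __metrics_path__: /metrics
```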

The node-exporter service starts the node exporter. It has access to the host’s network and process namespaces and to all mounted filesystems in order to gather metrics from them. The labels tell prometheus-docker-sd how to scrape these metrics. Since the container uses the host network and has no IP address of its own, I use the address of the docker0 interface (172.17.0.1) as the target.
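Without prometheus-docker-sd, the same node exporter could be scraped with the static configuration file mentioned earlier. A sketch of what /prometheus.yaml would contain in that case; the job name and interval are illustrative, the target is the docker0 address from above.

```yaml
# Static alternative to the file_sd setup (job name and interval are illustrative)
scrape_configs:
  - job_name: 'node'
    # Optional: how often to scrape this job
    scrape_interval: 30s
    static_configs:
      - targets:
          # Node exporter on the host, reached via the docker0 interface
          - 172.17.0.1:9100
```

The drawback is that every new exporter has to be added by hand, which is exactly what the label-based discovery avoids.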

Shortly after deploying these services, new metrics prefixed with “node_” appear in Grafana.

Adding visualizations

In Grafana, create a new dashboard, add a panel to it and enter the following query:

```
100 - node_filesystem_free_bytes / node_filesystem_size_bytes * 100
```

This shows the used disk space in percent for each filesystem on each monitored node. The series names can be customized in the “Legend” field; a good start is `{{container_id}}:{{mountpoint}}`. Disk usage will probably not change much within short periods, so the resolution can be set to 1/10.
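The query takes the share of free space per filesystem and subtracts it from 100. A worked example with made-up numbers, a 40 GiB filesystem with 10 GiB free:

$$
\text{used}\ [\%] = 100 - \frac{\text{free}}{\text{size}} \cdot 100 = 100 - \frac{10\ \text{GiB}}{40\ \text{GiB}} \cdot 100 = 100 - 25 = 75
$$

Division and multiplication bind tighter than subtraction in PromQL, so the query needs no parentheses.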

In the panel settings, add a meaningful title. Since the usage will always be between 0% and 100%, the minimum and maximum of the Y axis can be set to 0 and 100. In the field settings, set the unit to “Percent (0-100)”.

Apply the changes and don’t forget to save the dashboard.

Adding alerts

To send alerts via email, SMTP needs to be set up first. For this you need an email account for sending out mails. The following environment variables, added to the grafana service, configure SMTP in Grafana:

```yaml
GF_SMTP_ENABLED: "true"
GF_SMTP_HOST: mail.mydomain.com:587
GF_SMTP_USER: mailout@mydomain.com
GF_SMTP_PASSWORD: V3ryS3cr3tPassw0rd
GF_SMTP_FROM_ADDRESS: mailout@mydomain.com
GF_SMTP_FROM_NAME: "Monitoring"
GF_SMTP_EHLO_IDENTITY: mon.mydomain.com
GF_SMTP_STARTTLS_POLICY: MandatoryStartTLS
```

Grafana has a nice feature that sends images of the graphs along with alerts. This requires an image renderer, which can be run as a separate Docker container:

```yaml
services:
  grafana:
    image: grafana/grafana:7.4.3
    networks:
      - services
    # [...]
    environment:
      # [...]
      GF_RENDERING_SERVER_URL: http://grafana-image-renderer:8081/render
      GF_RENDERING_CALLBACK_URL: http://grafana:3000/

  grafana-image-renderer:
    image: grafana/grafana-image-renderer:2.0.1
    networks:
      - services
```

Now redeploy Grafana, go to Alerting -> Notification channels and add a new email notification channel. Use the “Test” button to verify that sending email works. After that, an alert can be added to the panel created before.
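The notification channel can alternatively be provisioned from a file as well. A sketch, assuming a hypothetical extra volume such as `./grafana-provisioning:/etc/grafana/provisioning:ro` on the grafana service, so the file lands in the notifiers subfolder of Grafana’s provisioning directory; the name, uid and recipient address are placeholders.

```yaml
# Hypothetical file: ./grafana-provisioning/notifiers/email.yml
notifiers:
  - name: Email
    type: email
    # Stable identifier for this channel
    uid: email-notifier
    org_id: 1
    # Use this channel for new alerts by default
    is_default: true
    settings:
      # Placeholder recipient address
      addresses: admin@example.com
```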